3. Vision and Goals

aevatar.ai is designed to support the development and deployment of AI agents while resolving the above challenges. It leverages a combination of open architecture, powerful orchestration capabilities, and cloud-native deployment to create a robust, scalable, and efficient system.

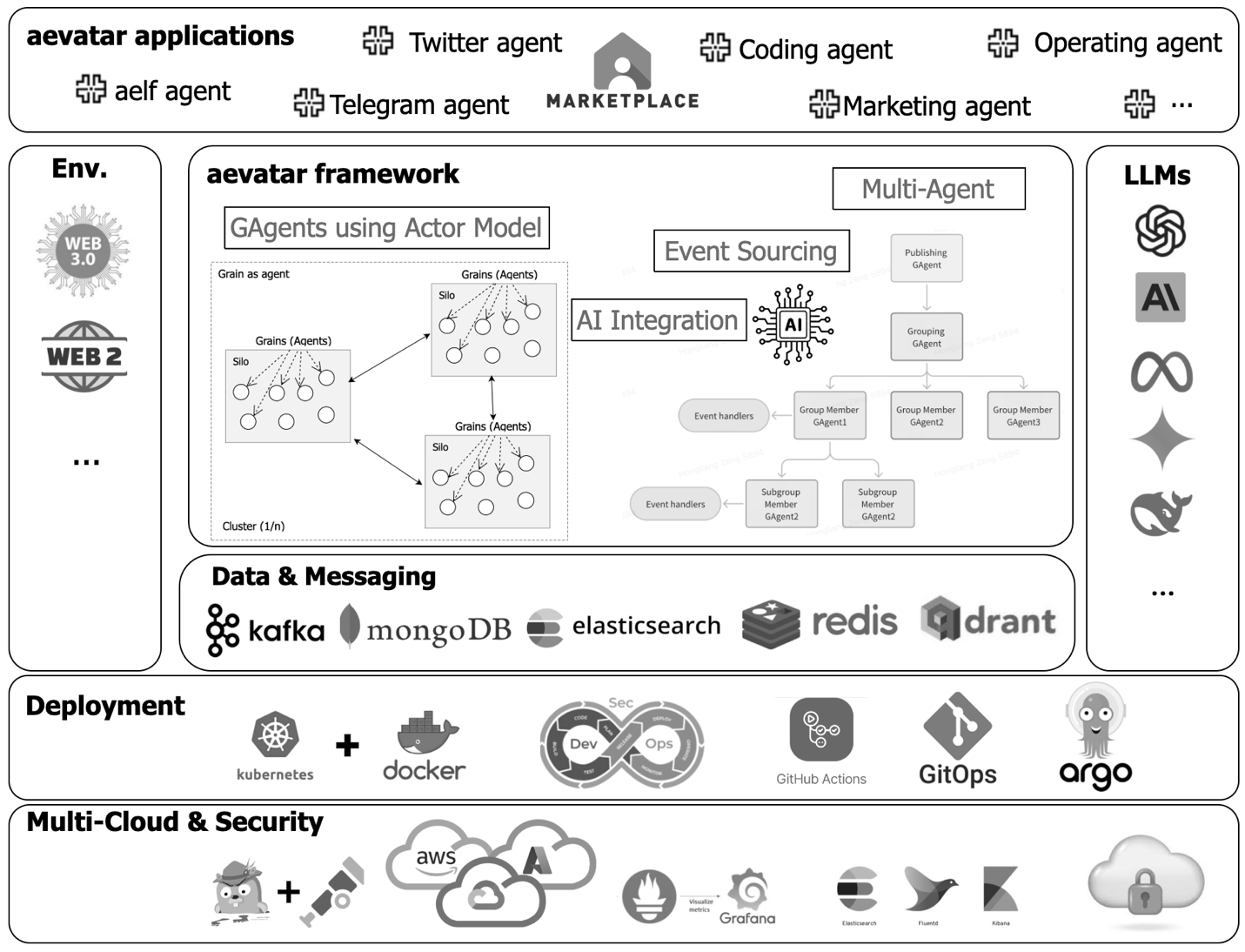

3.1 aevatar Framework

The aevatar framework is the core of aevatar.ai. It handles the essential processing logic, agent interactions, and core components.

Orleans “Grains” as Agents

- Orleans models actors, or “agents,” as grains: lightweight, isolated objects, each with its own identity, state, and behaviour.

- Clusters of silos (the runtime hosts in Orleans) coordinate these grains so they can be distributed and scaled across many servers.

- In this architecture, each grain is effectively an agent (often shown as “GAgent”).
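Orleans itself is a .NET framework, so the following Python sketch only mimics two grain properties described above: an agent is addressed by its identity and activated on first use, and it processes one call at a time over private state. `CounterAgent`, `Runtime`, and `get_agent` are hypothetical names chosen for illustration, not Orleans or aevatar APIs.

```python
import asyncio
from typing import Dict


class CounterAgent:
    """Hypothetical agent: private state, one call processed at a time."""

    def __init__(self, agent_id: str) -> None:
        self.agent_id = agent_id
        self.count = 0                # private state, never shared with other agents
        self._turn = asyncio.Lock()   # serialises calls, like a grain's turn-based execution

    async def handle(self, amount: int) -> int:
        async with self._turn:
            current = self.count
            await asyncio.sleep(0)    # stand-in for awaited I/O such as an LLM call
            self.count = current + amount
            return self.count


class Runtime:
    """Hypothetical stand-in for a silo: activates agents by id on first use."""

    def __init__(self) -> None:
        self._active: Dict[str, CounterAgent] = {}

    def get_agent(self, agent_id: str) -> CounterAgent:
        # Callers only know the id; activation happens implicitly on first call.
        if agent_id not in self._active:
            self._active[agent_id] = CounterAgent(agent_id)
        return self._active[agent_id]


async def main() -> None:
    agent = Runtime().get_agent("agent-42")
    # 100 concurrent calls run one at a time, so no update is lost.
    await asyncio.gather(*(agent.handle(1) for _ in range(100)))
    print(agent.count)


if __name__ == "__main__":
    asyncio.run(main())
```

Without the lock, the concurrent calls would interleave between the read and the write and lose updates; the serialised turn is what lets an agent keep simple, unsynchronised state.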

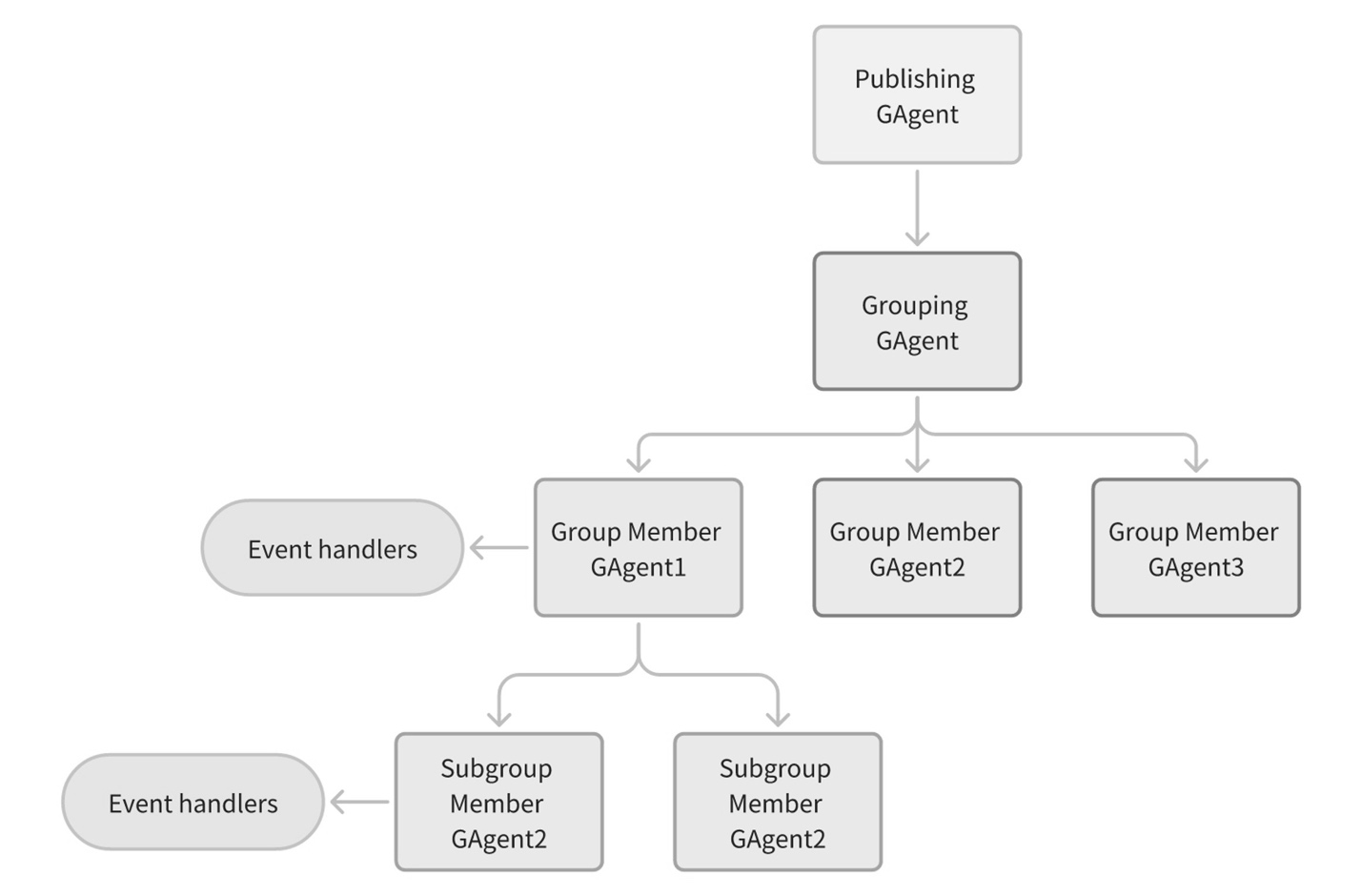

Multi‐Agent

- The diagram shows multi-layered agent groupings. For instance, a “Publishing GAgent” coordinates several “Group Member GAgent” instances.

- Event handlers manage asynchronous triggers or state changes, enabling agents to respond in real time to inbound data or updates from other agents.
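A minimal sketch of the publisher/member pattern, assuming events are plain typed objects and each member maps event types to async handlers. `NewsEvent`, `MemberAgent`, `PublishingAgent`, and `dispatch` are hypothetical names; the real GAgent event-handling API is not shown here.

```python
import asyncio
from dataclasses import dataclass
from typing import Any, Awaitable, Callable, Dict, List


@dataclass
class NewsEvent:
    """Hypothetical event type passed between agents."""
    headline: str


class MemberAgent:
    """Hypothetical group member that reacts to events via registered handlers."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.received: List[str] = []
        # Map each event type this agent cares about to its handler.
        self._handlers: Dict[type, Callable[[Any], Awaitable[None]]] = {
            NewsEvent: self.on_news,
        }

    async def on_news(self, event: NewsEvent) -> None:
        self.received.append(event.headline)

    async def dispatch(self, event: Any) -> None:
        handler = self._handlers.get(type(event))
        if handler is not None:  # events with no matching handler are ignored
            await handler(event)


class PublishingAgent:
    """Hypothetical coordinator that fans each event out to its group members."""

    def __init__(self) -> None:
        self.members: List[MemberAgent] = []

    def register(self, member: MemberAgent) -> None:
        self.members.append(member)

    async def publish(self, event: Any) -> None:
        # Members handle the event concurrently; publish returns once all are done.
        await asyncio.gather(*(m.dispatch(event) for m in self.members))


async def main() -> None:
    publisher = PublishingAgent()
    for name in ("writer", "reviewer"):
        publisher.register(MemberAgent(name))
    await publisher.publish(NewsEvent("Product launch"))
    print({m.name: m.received for m in publisher.members})


if __name__ == "__main__":
    asyncio.run(main())
```

Dispatching on the event's type keeps members decoupled from the publisher: adding a new reaction means registering a new handler, not changing the coordinator.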

AI Integration

- Semantic Kernel provides advanced AI orchestration and prompt‐chaining capabilities.
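Semantic Kernel has its own API for composing prompts; the sketch below does not use it and only illustrates the prompt-chaining idea, where each step's output becomes the next step's input. `run_chain` and `fake_model` are hypothetical, and the model is a deterministic stand-in so the chain runs offline.

```python
from typing import Callable, List

# Hypothetical model call: any function from prompt text to completion text.
Model = Callable[[str], str]


def run_chain(model: Model, templates: List[str], user_input: str) -> str:
    """Feed each step's output into the next step's prompt template."""
    text = user_input
    for template in templates:
        # Assumes {input} is the only placeholder in each template.
        prompt = template.format(input=text)
        text = model(prompt)
    return text


def fake_model(prompt: str) -> str:
    # Deterministic stand-in for an LLM; it just wraps the prompt it received.
    return f"<{prompt}>"


if __name__ == "__main__":
    steps = ["Summarise: {input}", "Translate to French: {input}"]
    print(run_chain(fake_model, steps, "quarterly report text"))
```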

By combining all the above, the system can scale out large numbers of AI agents, each with specialised tasks, while also coordinating them in groups or sub‐groups to accomplish more complex, collaborative goals.

3.2 aevatar Applications

- Marketplace: A centralised platform where various AI agents can be discovered, developed, managed, and deployed.

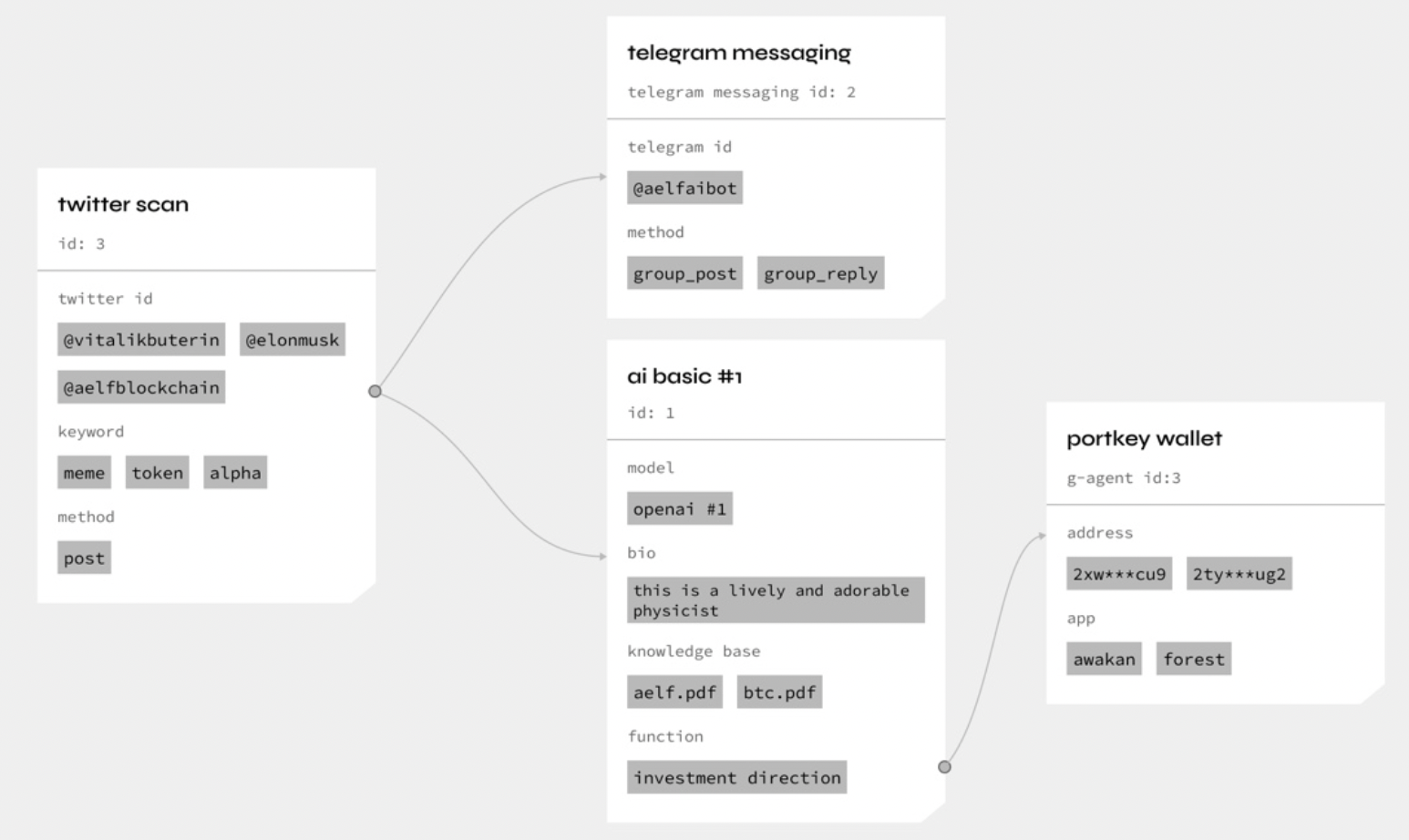

- Agents: Individual AI agents that perform specific tasks or functions. These agents can be developed and deployed independently.

- Webhook: aevatar can orchestrate a large number of external inputs by converting real-world triggers into structured events that GAgents can handle, enabling continuous, real-time interaction with a variety of external systems.
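As a sketch of the webhook-to-event conversion, assume an incoming webhook body is a JSON object carrying a `type` field. That payload shape, the `AgentEvent` fields, and `webhook_to_event` are all hypothetical; real sources each define their own formats.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Dict


@dataclass(frozen=True)
class AgentEvent:
    """Hypothetical structured event that a GAgent could handle."""
    source: str
    event_type: str
    payload: Dict[str, Any]
    received_at: datetime


def webhook_to_event(source: str, raw_body: bytes) -> AgentEvent:
    """Parse a raw webhook body into a typed event, rejecting malformed input early."""
    data = json.loads(raw_body)
    if not isinstance(data, dict) or "type" not in data:
        raise ValueError("webhook body must be a JSON object with a 'type' field")
    event_type = str(data.pop("type"))
    return AgentEvent(
        source=source,
        event_type=event_type,
        payload=data,  # remaining fields travel with the event unchanged
        received_at=datetime.now(timezone.utc),
    )


if __name__ == "__main__":
    event = webhook_to_event("github", b'{"type": "push", "ref": "main"}')
    print(event.event_type, event.payload)
```

Validating at the boundary means agents only ever see well-formed, typed events, regardless of which external system fired the trigger.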

Example Agents:

- Twitter Agent: Monitors tweets, posts updates, or interacts with Twitter.

- Telegram Agent: Works with Telegram for chat interactions.

- Coding Agent: Helps generate or review code.

- Marketing Agent: Performs marketing tasks such as campaign management.

- Operating Agent: Handles operational tasks.

- aelf Agent: Leverages aelf on-chain and off-chain data to perform automated tasks.

These represent end‐user‐facing “products” built on top of the underlying multi‐agent framework. Each of these agents can have specialised logic, connect to external APIs, and leverage the core aevatar engine.

3.3 Environment (Web 2 / Web 3)

This layer of the diagram indicates the broader context in which aevatar agents operate: traditional Web 2.0 environments (e.g., REST APIs, SaaS services) and Web 3.0 contexts (e.g., blockchain or decentralised services). The framework is designed to plug into both ecosystems seamlessly.

3.4 LLM Integration

On the right side of the diagram, you see major LLM (Large Language Model) providers:

- OpenAI / ChatGPT

- Anthropic

- Meta

- Azure OpenAI

- DeepSeek

- And more…

These LLMs are integrated through the Semantic Kernel connectors, so each agent can leverage natural language understanding, generation, and advanced reasoning.
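The value of a connector layer is that agents code against one interface and the provider becomes a configuration choice. The sketch below assumes a hypothetical `ChatModel` protocol and `ModelRouter`; the actual Semantic Kernel connector interfaces are not shown, and `EchoModel` is an offline stand-in for a real provider.

```python
import asyncio
from typing import Dict, List, Protocol


class ChatModel(Protocol):
    """Hypothetical common interface that every provider connector implements."""
    name: str

    async def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Offline stand-in for a real provider connector (OpenAI, Anthropic, ...)."""

    def __init__(self, name: str) -> None:
        self.name = name

    async def complete(self, prompt: str) -> str:
        await asyncio.sleep(0)  # where a real connector would make a network call
        return f"{self.name}: {prompt}"


class ModelRouter:
    """Lets an agent switch providers by name or query several in parallel."""

    def __init__(self, models: List[ChatModel]) -> None:
        self._models: Dict[str, ChatModel] = {m.name: m for m in models}

    async def ask(self, provider: str, prompt: str) -> str:
        return await self._models[provider].complete(prompt)

    async def ask_all(self, prompt: str) -> Dict[str, str]:
        # Fan the same prompt out to every provider concurrently.
        names = list(self._models)
        answers = await asyncio.gather(*(self._models[n].complete(prompt) for n in names))
        return dict(zip(names, answers))


if __name__ == "__main__":
    router = ModelRouter([EchoModel("openai"), EchoModel("anthropic")])
    print(asyncio.run(router.ask("anthropic", "Hello")))
    print(asyncio.run(router.ask_all("Hello")))
```

`ask` covers switching between models per task, and `ask_all` covers using several models in parallel on the same task.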

3.5 Data & Messaging

Above the framework, we see core data and messaging technologies:

- Kafka: Real‐time messaging and event streaming

- MongoDB: Document‐based or general data storage

- Elasticsearch: Full‐text search and analytics at scale

- Redis: In‐memory data store for caching and high‐speed access

- Qdrant: Vector database for storing embeddings and running similarity search

These technologies enable high‐throughput data ingestion, search, caching, and state management—critical for large‐scale agent interactions.
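To make the role of a vector store concrete, here is a brute-force, in-memory sketch of cosine-similarity search. It is not the Qdrant API: `InMemoryVectorStore` is hypothetical, and its `upsert`/`search` names are chosen for illustration without mirroring the Qdrant client's signatures. Qdrant itself builds an HNSW index for approximate nearest-neighbour search rather than scanning every point.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 for zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical brute-force stand-in for a vector database."""

    def __init__(self) -> None:
        self._points: Dict[str, List[float]] = {}

    def upsert(self, point_id: str, vector: List[float]) -> None:
        # Insert a new point or overwrite an existing one with the same id.
        self._points[point_id] = vector

    def search(self, query: List[float], limit: int = 3) -> List[Tuple[str, float]]:
        # Score every stored vector and return the best matches first.
        scored = [(pid, cosine(query, vec)) for pid, vec in self._points.items()]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:limit]


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.upsert("doc-a", [1.0, 0.0])
    store.upsert("doc-b", [0.0, 1.0])
    print(store.search([0.9, 0.2], limit=1))
```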

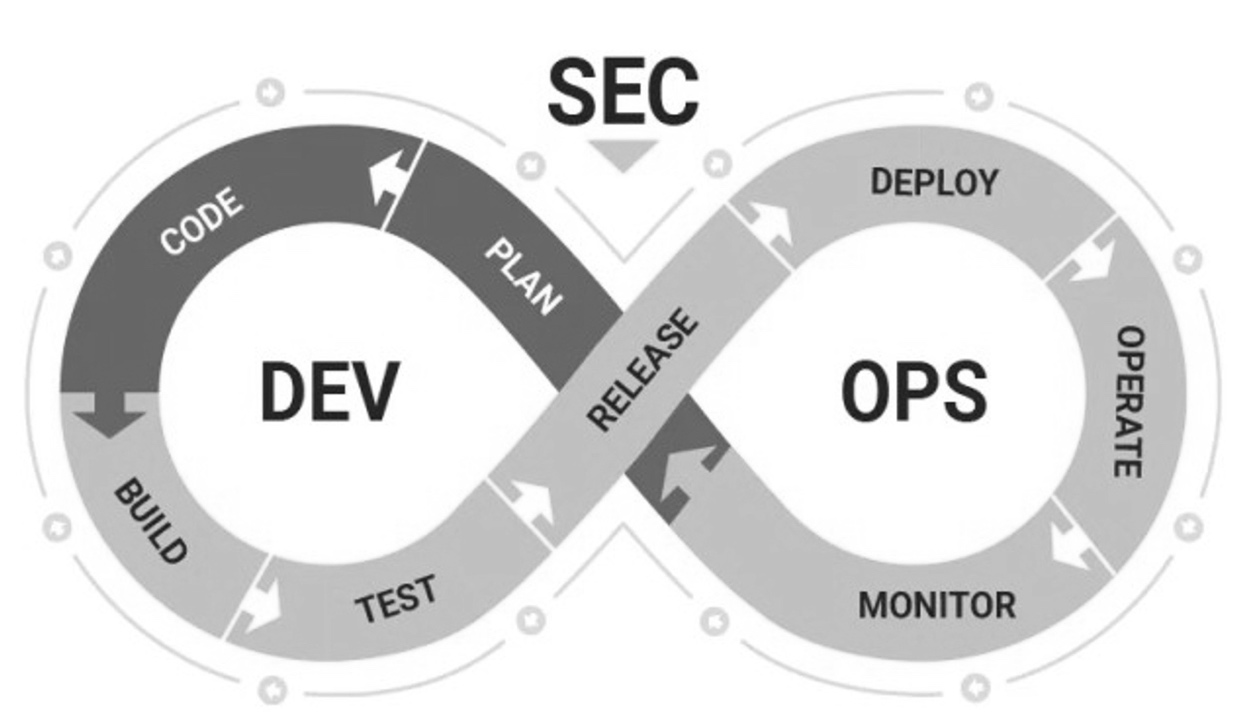

3.6 Deployment & DevSecOps

The following DevSecOps tooling is used to build, deploy, and manage the aevatar framework:

- Kubernetes + Docker: Containerisation and orchestration across clusters.

- GitHub Actions, GitOps, Argo: CI/CD pipelines and GitOps-style deployment for automated, versioned releases.

- The “DevSecOps” loop highlights security‐focused continuous integration/continuous deployment practices.

3.7 Multi‐Cloud & Security

Finally, there is a multi‐cloud strategy across:

- Cloud providers: Google Cloud Platform, Amazon Web Services, Microsoft Azure, and others are supported for deployment.

- Additional tooling for security and observability, such as Grafana (monitoring dashboards), Vault (secrets management), Elasticsearch/Fluentd/Kibana (EFK stack for logs and analytics), and so on.

This ensures the platform can run in a secure, fault‐tolerant, and cost‐efficient way across different cloud infrastructures.

3.8 Putting It All Together

- Each aevatar application (like a Twitter Agent or Coding Agent) is an Orleans “grain” (or set of grains) wrapped in specialised logic.

- The Multi‐Agent or “grouping” approach coordinates large collections of these grains, allowing them to pass events/messages among themselves through Kafka, Redis, or direct Orleans messaging.

- Semantic Kernel helps orchestrate more advanced AI reasoning, prompt chaining, and memory/knowledge.

- The entire setup is packaged for cloud deployment (Kubernetes + Docker) and integrated with logging, security, and monitoring solutions (Grafana, Vault, EFK).

- This combination provides a scalable, fault‐tolerant, and highly extensible platform to run AI agents across multiple domains—Web 2 and Web 3, on multiple clouds, with robust security and observability.

In short, aevatar.ai is a full‐stack, cloud‐native, multi‐agent orchestration framework that leverages Orleans for actor‐based scaling, integrates with Semantic Kernel for AI functionality, and utilises a comprehensive DevSecOps pipeline plus multi‐cloud deployment strategy.

3.9 aevatar Advantages

- Multi-Agent Collaboration

Through a distributed actor model (based on Orleans) and multi-agent management mechanism, aevatar.ai achieves efficient interconnection and complex event scheduling between multiple AI agents, supporting cross-platform and cross-scenario collaborative workflows.

aevatar.ai’s multi-agent framework divides AI agents into different functional roles. It assigns each agent specific responsibilities and groups multiple agents to fill different niches within a system, so that user-assigned tasks are completed from a systemic perspective.
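A minimal sketch of role-based grouping, assuming a task arrives as a plan of `(role, step)` pairs and each role is filled by exactly one agent. `RoleAgent`, `AgentGroup`, and the plan format are hypothetical illustrations, not aevatar's actual scheduling mechanism.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class RoleAgent:
    """Hypothetical agent with one functional role and a handler for its niche."""
    role: str
    work: Callable[[str], str]


@dataclass
class AgentGroup:
    """Hypothetical group that routes each plan step to the agent owning that role."""
    members: Dict[str, RoleAgent] = field(default_factory=dict)

    def add(self, agent: RoleAgent) -> None:
        self.members[agent.role] = agent

    def run(self, plan: List[Tuple[str, str]]) -> List[str]:
        results = []
        for role, step in plan:
            if role not in self.members:
                raise KeyError(f"no agent in the group fills the role '{role}'")
            results.append(self.members[role].work(step))
        return results


if __name__ == "__main__":
    group = AgentGroup()
    group.add(RoleAgent("research", lambda s: f"notes on {s}"))
    group.add(RoleAgent("writing", lambda s: f"draft of {s}"))
    print(group.run([("research", "market trends"), ("writing", "blog post")]))
```

Failing loudly on an unfilled role makes gaps in a group's composition visible before a task silently goes unfinished.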

- Unified Cross-Model Collaboration

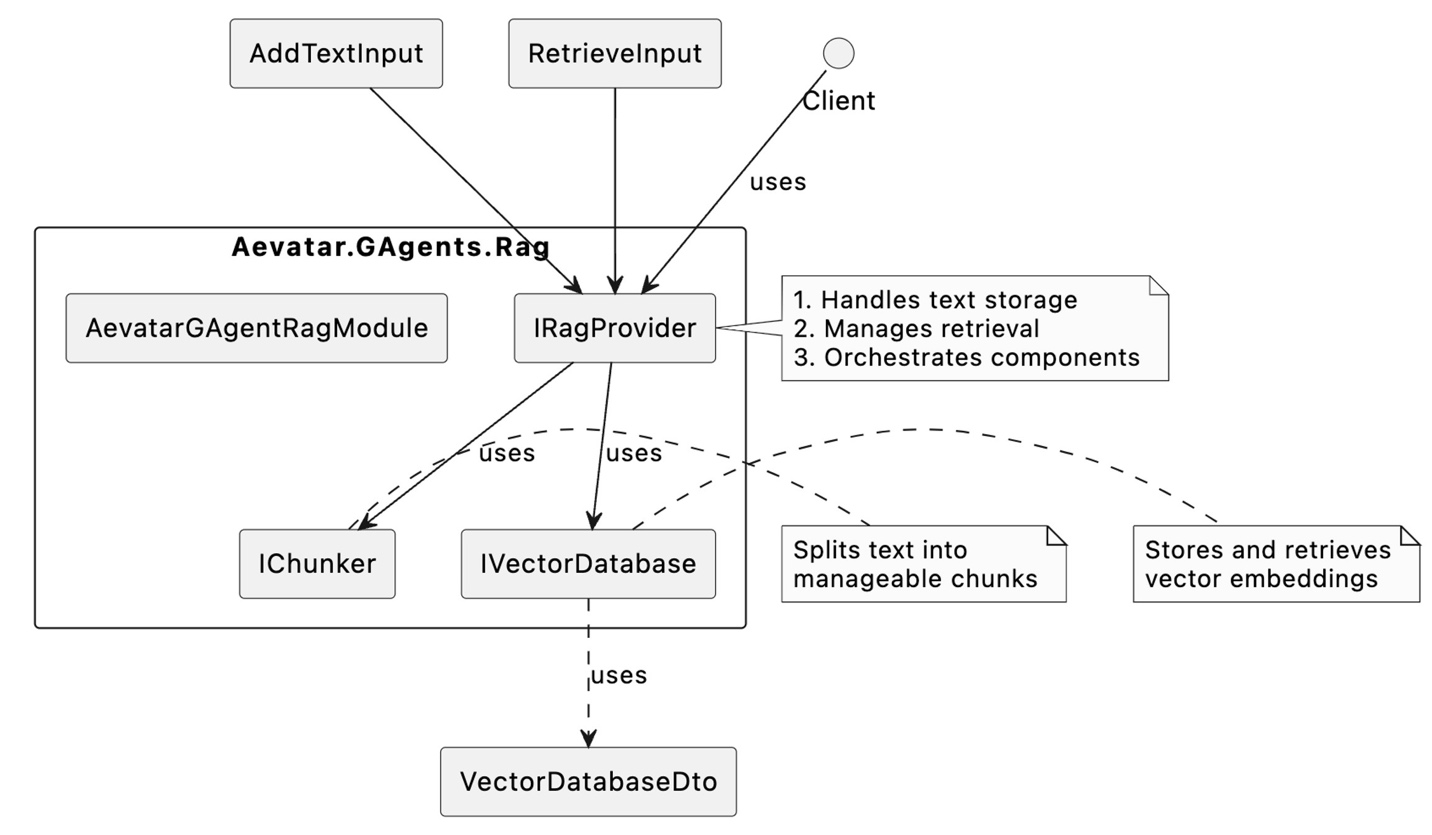

aevatar.ai provides an agent framework that runs multiple language models in parallel. This overcomes the limitations of relying on a single model and supports switching freely between models, or using several in parallel, across different tasks, which improves the system's flexibility and performance.

- Multi-Agent RAG Architecture

Under the multi-agent RAG architecture, each AI agent is a customised RAG pipeline with its own knowledge base, retrieval strategy, and generation configuration, so each agent answers within the domain where it is most proficient.

Through the orchestrator, user questions are allocated to appropriate agents. Multiple agents can also be called in parallel, and answers are consolidated through the information integration module. This achieves a more professional, comprehensive, and scalable question-answering or information-generation system.

The multi-agent RAG model enables:

- Flexible expansion: Quick deployment of new agents based on different business lines or knowledge domains.

- Noise reduction: Utilising domain-specific knowledge bases to reduce irrelevant information interference.

- Enhanced credibility: Cross-verification between multiple agents.

- Sustainability: Independent maintenance of each agent's knowledge base, facilitating a divide-and-conquer approach.

This enables the building of a multi-agent RAG platform capable of consistently producing high-quality content.
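The orchestration flow above (route, query in parallel, consolidate, cross-verify) can be sketched as follows. Everything here is a simplifying assumption: routing is by keyword overlap, retrieval is substring matching over a toy knowledge base instead of embeddings, the generation step is omitted, and consolidation ranks facts by how many agents returned them. `DomainAgent` and `orchestrate` are hypothetical names.

```python
import asyncio
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class DomainAgent:
    """Hypothetical RAG agent owning a private knowledge base for one domain."""
    domain: str
    keywords: Set[str]
    knowledge: Dict[str, str]  # toy knowledge base: term -> fact

    async def answer(self, question: str) -> List[str]:
        # Toy retrieval: return facts whose key term appears in the question.
        q = question.lower()
        return [fact for term, fact in self.knowledge.items() if term in q]


async def orchestrate(agents: List[DomainAgent], question: str) -> List[str]:
    """Route to relevant agents, query them in parallel, and merge their answers."""
    words = set(question.lower().split())
    relevant = [a for a in agents if a.keywords & words] or agents  # fall back to all
    results = await asyncio.gather(*(a.answer(question) for a in relevant))
    # Cross-verification: facts returned by more agents are ranked first.
    votes = Counter(fact for facts in results for fact in set(facts))
    return [fact for fact, _ in votes.most_common()]


if __name__ == "__main__":
    billing = DomainAgent("billing", {"fee", "invoice"}, {"fee": "Fees are charged per transaction."})
    support = DomainAgent("support", {"fee", "help"}, {"fee": "Fees are charged per transaction."})
    print(asyncio.run(orchestrate([billing, support], "How is the fee charged?")))
```

Because each `DomainAgent` keeps its own knowledge base, adding a domain means registering one more agent, which mirrors the flexible-expansion and divide-and-conquer points listed above.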

- Visualisation and Ease of Use

The aevatar.ai dashboard provides low-code/no-code visual orchestration tools that help users design and monitor complex workflows. This significantly lowers technical barriers, so that almost anyone can quickly start creating and personalising AI agents.
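One way to picture what a visual workflow editor produces is a dependency graph that an engine executes in order. The sketch below assumes a hypothetical export format (node name mapped to its upstream nodes) and uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+); the node names and `run_workflow` are illustrative only, not the dashboard's actual format.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List

# Hypothetical workflow as a visual editor might export it: node -> upstream nodes.
WORKFLOW: Dict[str, List[str]] = {
    "fetch_tweets": [],
    "summarise": ["fetch_tweets"],
    "draft_reply": ["summarise"],
    "post_reply": ["draft_reply"],
    "log_metrics": ["summarise"],
}


def run_workflow(graph: Dict[str, List[str]], steps: Dict[str, Callable[[], None]]) -> List[str]:
    """Run each node after all of its upstream nodes; return the execution order."""
    # static_order() raises graphlib.CycleError if the drawn graph contains a cycle.
    order = list(TopologicalSorter(graph).static_order())
    for node in order:
        steps[node]()  # a real engine would also pass data between nodes and handle failures
    return order


if __name__ == "__main__":
    actions = {name: (lambda name=name: print("running", name)) for name in WORKFLOW}
    run_workflow(WORKFLOW, actions)
```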

- Security and Scalability

Based on the cloud-native DevSecOps and microservice architecture, aevatar.ai provides elastic scaling and high concurrency processing capabilities, while ensuring system security and compliance.

As a result, it meets enterprise-level requirements.